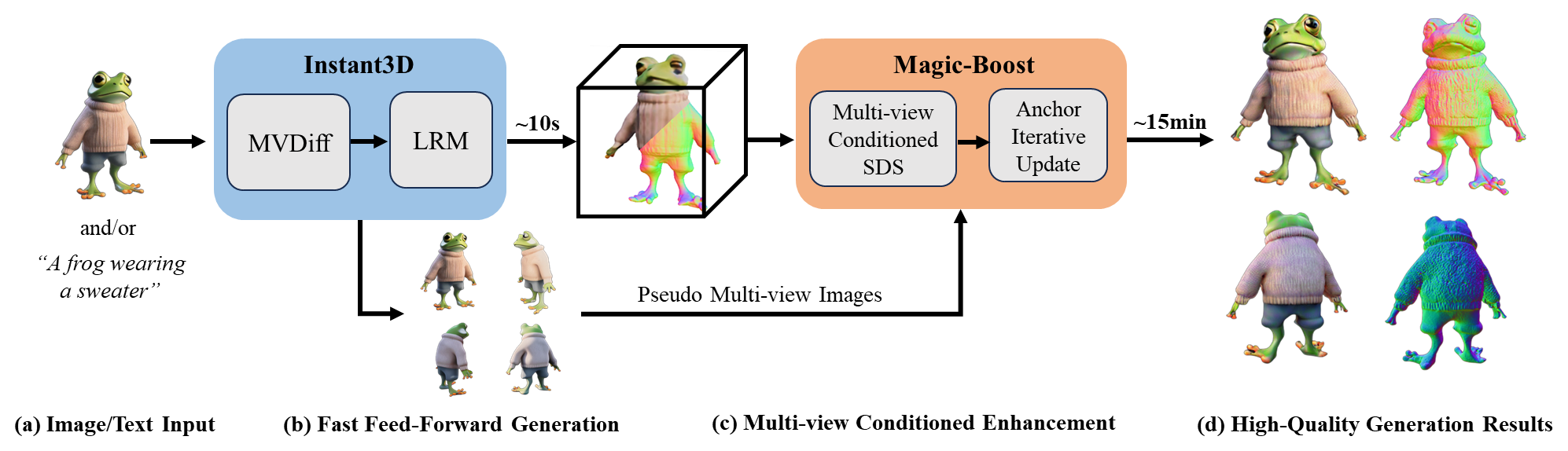

Benefiting from the rapid development of 2D diffusion models, 3D content creation has made significant progress recently. One promising solution involves fine-tuning pre-trained 2D diffusion models to harness their capacity for producing multi-view images, which are then lifted into accurate 3D models via methods like fast-NeRFs or large reconstruction models. However, because inconsistency still exists across the generated views and the generated resolution is limited, the results of such methods still lack intricate textures and complex geometries. To solve this problem, we propose Magic-Boost, a multi-view conditioned diffusion model that significantly refines coarse generative results through a brief period of Score Distillation Sampling (SDS) optimization (~15 min). Compared with previous text-based or single-image-based diffusion models, Magic-Boost exhibits a robust capability to generate highly consistent images from pseudo-synthesized multi-view images. It provides precise SDS guidance that aligns well with the identity of the input images, thereby enriching the local detail in both geometry and texture of the initial generative results. Extensive experiments show that Magic-Boost greatly enhances the coarse inputs and generates high-quality 3D assets with rich geometric and textural details.
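
For context, the SDS optimization mentioned above is commonly written as the gradient below, in the generic form introduced with DreamFusion. This is a sketch of that standard objective, not necessarily the exact loss used by Magic-Boost. The symbols are assumptions for illustration: $\theta$ denotes the parameters of the 3D representation, $g$ a differentiable renderer, $c$ a camera pose, $y$ the conditioning signal (here it would be the pseudo multi-view images rather than a text prompt), $\hat{\epsilon}_\phi$ the noise predicted by the diffusion model, and $w(t)$ a timestep-dependent weight.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
= \mathbb{E}_{t,\epsilon}\!\left[\, w(t)\,\bigl(\hat{\epsilon}_\phi(x_t;\, y,\, t) - \epsilon\bigr)\,\frac{\partial x}{\partial \theta} \right],
\qquad x = g(\theta, c), \quad x_t = \alpha_t x + \sigma_t \epsilon, \quad \epsilon \sim \mathcal{N}(0, I)
$$

Under this reading, the more closely the conditioning $y$ matches the identity of the target object, the more specific the residual $\hat{\epsilon}_\phi - \epsilon$ is to that object.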

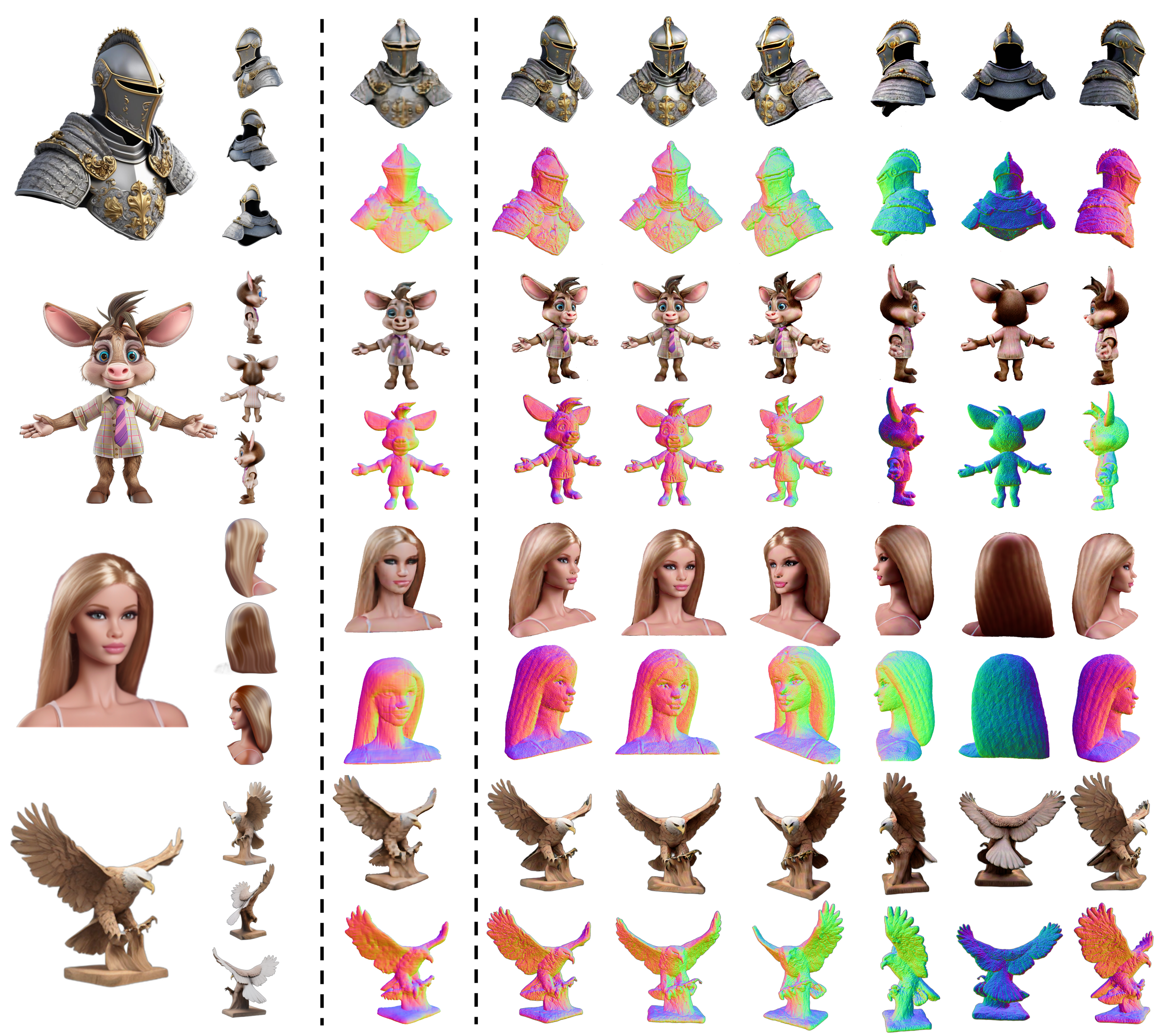

Figure 1. Provided with an input image and its coarse 3D generation, Magic-Boost effectively boosts it to a high-quality 3D asset within 15 minutes. From left to right, we show the input image, the pseudo multi-view images and coarse 3D results from Instant3D, and the significantly improved results produced by our method.